Speaker Recognition

Azure Speaker Recognition lets you identify speakers or use speech to authenticate users. For more information, see Azure Speaker Recognition.

Use the Speaker Recognition action to either identify or authenticate a speaker.

Complete the following steps to use the Speaker Recognition action in your workflow:

1. Drag the Speaker Recognition action under Azure to the canvas, place the pointer on the action, and then click the displayed icon or double-click the action. The Speaker Recognition window opens.

2. Edit the Label, if needed. By default, the label name is the same as the action name.

3. To add an Azure connector type, refer to Supported Azure Connector Types.

If you previously added a connector type, select the appropriate Connector Type, and under Connector Name, select the connector.

4. Click TEST to validate the connector.

5. Click MAP CONNECTOR to execute the action using a connector that is different from the one that you are using to populate the input fields. In the Runtime Connector field, provide a valid Azure connector name. For more information about MAP CONNECTOR, see Using Map Connector.

If you selected None as the Connector Type, the MAP CONNECTOR option is not available.

6. In the Select Specific Service list, select one of the following options:

◦ Select Verification and do the following:

a. In the Resource Group list, select the appropriate resource group defined under your Azure subscription.

b. In the Speaker Account list, select the appropriate Speaker Recognition account.

c. In the Verification Profile ID list, select the profile ID from the Speaker Recognition account.

d. In the Audio File field, map the output of a previous action to provide the path to an audio file.

◦ Select Identification and do the following:

a. In the Resource Group list, select the appropriate resource group defined under your Azure subscription.

b. In the Speaker Account list, select the appropriate Speaker Recognition account.

c. In the Audio File field, map the output of a previous action to provide the path to an audio file.

d. Under the Speakers group, in the Identification Profile ID list, select the profile ID from the Speaker Recognition account.

Click Add to add multiple profile IDs. Click the delete icon to delete a profile ID.

7. Click Done.

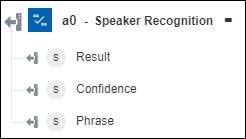
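
The inputs required in step 6 differ between the two services. As a rough illustration only, the following Python sketch checks which inputs are still missing for each service. The class, field names, and function are hypothetical and are not part of the product; they simply mirror the fields listed in the steps above.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class SpeakerRecognitionInputs:
    # Hypothetical container mirroring the fields in step 6; not a product API.
    service: str                      # "Verification" or "Identification"
    resource_group: str
    speaker_account: str
    audio_file: str                   # path mapped from a previous action
    verification_profile_id: Optional[str] = None
    identification_profile_ids: List[str] = field(default_factory=list)


def missing_inputs(inputs: SpeakerRecognitionInputs) -> List[str]:
    """Return the names of required inputs that are empty for the chosen service."""
    missing = []
    # Both services need a resource group, a speaker account, and an audio file.
    for name in ("resource_group", "speaker_account", "audio_file"):
        if not getattr(inputs, name):
            missing.append(name)
    if inputs.service == "Verification":
        # Verification uses a single profile ID.
        if not inputs.verification_profile_id:
            missing.append("verification_profile_id")
    elif inputs.service == "Identification":
        # Identification accepts one or more profile IDs.
        if not inputs.identification_profile_ids:
            missing.append("identification_profile_ids")
    else:
        raise ValueError(f"Unknown service: {inputs.service}")
    return missing
```

For example, calling `missing_inputs` on an Identification configuration with no profile IDs returns `["identification_profile_ids"]`.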

Output schema

The Speaker Recognition action returns a Result, together with a confidence level and a Phrase.

For Verification, the Result is either Accept or Reject, and the Phrase is the sentence that the speaker spoke.

For Identification, the Result is the ID of the person. If the Speaker Recognition API does not find a match among the profile IDs, it returns a null value as the Result. For Identification, the action returns a null value for the Phrase field.
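
A later action in the workflow usually has to branch on these values. The following Python sketch shows one way to interpret the output for each service, assuming the output is available as three fields. The names `result`, `confidence`, and `phrase` are hypothetical; check the actual output schema of the action for the exact field names.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class SpeakerRecognitionOutput:
    # Hypothetical field names based on the description above.
    result: Optional[str]      # "Accept"/"Reject", a person ID, or None
    confidence: Optional[str]  # treated here as an opaque value
    phrase: Optional[str]      # None for Identification


def summarize(service: str, output: SpeakerRecognitionOutput) -> str:
    """Turn the action output into a short, human-readable summary."""
    if service == "Verification":
        if output.result == "Accept":
            return (f"Speaker verified (confidence: {output.confidence}, "
                    f"phrase: {output.phrase!r})")
        return f"Speaker rejected (confidence: {output.confidence})"
    if service == "Identification":
        # A null Result means no profile ID matched the audio.
        if output.result is None:
            return "No matching profile found"
        return f"Identified profile {output.result} (confidence: {output.confidence})"
    raise ValueError(f"Unknown service: {service}")
```

Handling the null Result explicitly keeps the "no match" case for Identification separate from a Reject in Verification, which are different outcomes.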