ThingWorx Deployment Architectures

The following sections include diagrams of typical ThingWorx deployments. The architectures range from simple development systems to multi-node clusters and global federated production systems. For information about ThingWorx components, see ThingWorx Foundation Deployment Components.

To deploy ThingWorx in hybrid and multi-site deployments, refer to Distributed ThingWorx Deployment.

It is recommended to deploy the ThingWorx Server and Azure PostgreSQL Flexible Server in the same geographical location.

Deployment Options

• PTC Cloud Services - In a managed-services deployment, the ThingWorx application is hosted and managed on a third-party server, often in a private cloud. An outside organization is responsible for managing the necessary infrastructure and ensuring performance.

For companies concerned about the IT burden and expertise required to manage ThingWorx, PTC provides a managed-services deployment option. With PTC Cloud Services, companies purchasing ThingWorx can accelerate deployment, minimize IT costs and requirements, and ensure ongoing performance. PTC Cloud Services hosts your ThingWorx solution in a secure environment within commercial cloud services, with ongoing application management, performance tuning, and updates. For more information, see www.ptc.com/services/cloud.

• On-Premises Deployment - An on-premises deployment means installing and running the ThingWorx software on servers at your own site or data center. You are responsible for procuring, installing, and maintaining the infrastructure and software applications, as well as their ongoing support in terms of health, availability, and performance.

With an on-premises deployment, you can perform the deployment yourself or engage PTC Professional Services (or a PTC-certified partner) to manage the deployment. This option is suitable for companies with a robust IT organization and a strong desire to maintain in-house control.

Refer to the following sections for additional details:

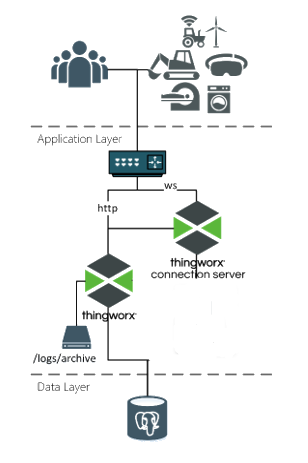

ThingWorx Foundation Basic Production System

For a basic production system, it is recommended to run the database on a separate server. This configuration suits a small-to-medium-sized enterprise system or a medium-to-large manufacturing system.

| List of Components | Number of Components |
|---|---|
| Load Balancer | 1 |
| ThingWorx Connection Server | 1 |
| ThingWorx Foundation Server | 1 |
| Attached or NAS File Storage | 1 |
| Database | 1 |

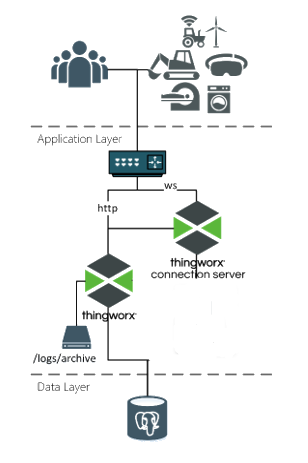

ThingWorx Foundation Large Production System (non-HA)

A large production system incorporates additional components to support higher numbers of connected devices and high data ingestion rates.

In addition to the platform components, a large production system may include ThingWorx Connection Servers and an InfluxDB time-series database.

The InfluxDB system ingests the data coming from the assets; ThingWorx manages this data as logged value stream content.

A relational database is still required to maintain the ThingWorx model.

InfluxDB can be deployed in either single-node or multi-node (Enterprise) configurations based on ingestion and high-availability requirements. Refer to Using InfluxDB as the Persistence Provider for additional details.

| List of Components | Number of Components |
|---|---|
| Load Balancer | 1 (distributes device traffic to Connection Servers) |
| ThingWorx Connection Server | 2..n (depends on number of devices) |
| ThingWorx Foundation Server | 1 |
| Relational Database | 1 |
| InfluxDB (single-node) | 1 |

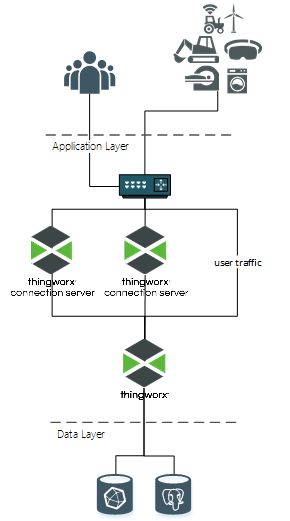

ThingWorx Production Clusters

For a high-availability (HA) deployment, additional components are added to remove single points of failure in the Application and Data layers. The following components are required for the ThingWorx platform:

• A high-availability load balancer. The Connection Server and Foundation Server node groups each require a load balancer instance to distribute load, and an InfluxDB Enterprise cluster, if used, requires an instance as well. Many load balancers can serve multiple instances, so a single appliance can serve all three instances if configured appropriately.

• Two (or more) ThingWorx Connection Servers. In clustered operations, Connection Servers are required to distribute device load across the cluster and to redistribute it if a node fails.

• Two (or more) ThingWorx Foundation instances. Each node is active, and load is distributed between them.

• ThingWorxStorage is on-disk storage that is shared (accessible by each node).

For a complete high-availability deployment, the load balancers and shared ThingWorxStorage should also be redundant.

• Apache Ignite nodes to provide shared cache for the ThingWorx Foundation nodes.

• Three Apache ZooKeeper nodes. ZooKeeper monitors ThingWorx nodes to decide whether each one is responsive and functioning as expected. The ZooKeeper nodes form a quorum and decide when a ThingWorx node is offline. If a ThingWorx Foundation node goes offline, ZooKeeper reconfigures the ThingWorx load balancer to direct traffic to the other Foundation nodes.
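ZooKeeper reaches decisions by majority quorum, which is why three nodes are listed here and why the component tables call for odd-numbered counts. The Python sketch below shows the arithmetic, assuming the standard strict-majority rule for a ZooKeeper ensemble; `quorum_size` and `tolerated_failures` are hypothetical helpers, not part of ThingWorx or ZooKeeper.

```python
def quorum_size(ensemble: int) -> int:
    """Smallest strict majority of an ensemble (assumes majority quorum)."""
    if ensemble < 1:
        raise ValueError("ensemble must contain at least one node")
    return ensemble // 2 + 1


def tolerated_failures(ensemble: int) -> int:
    """Nodes that can fail while the survivors still form a majority."""
    return ensemble - quorum_size(ensemble)


if __name__ == "__main__":
    # Compare ensemble sizes: even sizes add a node without adding tolerance.
    for n in range(1, 7):
        print(f"{n} nodes: quorum {quorum_size(n)}, "
              f"tolerates {tolerated_failures(n)} failure(s)")
```

Going from three to four nodes raises the quorum from two to three without tolerating any additional failure, so an even-numbered fourth node adds cost but no resilience.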

The following components are required for the PostgreSQL database:

• PostgreSQL server nodes - a minimum of two nodes; three are recommended.

• pgpool-II nodes - a minimum of two nodes (ideally three) that perform failover and recovery tasks in the event of a PostgreSQL server failure. These nodes maintain connections between the client (ThingWorx) and the servers (PostgreSQL) and manage replication of content among the PostgreSQL server nodes.

The following components are required for the InfluxDB Enterprise system:

• InfluxDB Enterprise Meta nodes – A three-node setup is recommended so that a quorum can be reached and the cluster can continue operating if one node fails.

• InfluxDB Enterprise Data nodes – Can be one, but a minimum of two is advised. A node count that is evenly divisible by your InfluxDB replication factor is recommended.
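The data-node guidance above can be expressed as a small check. This is a minimal Python sketch, not an InfluxData tool: `check_data_nodes` is a hypothetical helper, and it assumes that each copy of a shard is placed on a distinct data node, so the replication factor should not exceed the data-node count.

```python
def check_data_nodes(data_nodes: int, replication_factor: int) -> list[str]:
    """Return warnings for a proposed InfluxDB Enterprise data-node count.

    Hypothetical helper encoding the guidance in this section.
    Assumes each shard copy lives on a distinct data node.
    """
    if replication_factor < 1:
        raise ValueError("replication factor must be at least 1")
    warnings = []
    if data_nodes < 1:
        warnings.append("at least one data node is required")
    elif data_nodes < 2:
        warnings.append("a minimum of two data nodes is advised")
    if replication_factor > data_nodes:
        warnings.append("replication factor exceeds the number of data nodes")
    elif data_nodes % replication_factor != 0:
        warnings.append(
            f"{data_nodes} data nodes is not evenly divisible "
            f"by replication factor {replication_factor}"
        )
    return warnings


if __name__ == "__main__":
    for nodes, rf in [(2, 2), (3, 2), (4, 2), (1, 1)]:
        print(nodes, rf, check_data_nodes(nodes, rf) or "ok")
```

The sketch only encodes the recommendations stated in this section; it does not model how InfluxDB Enterprise actually places shards across data nodes.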

For additional details about deploying ThingWorx in a high-availability environment, see ThingWorx High Availability.

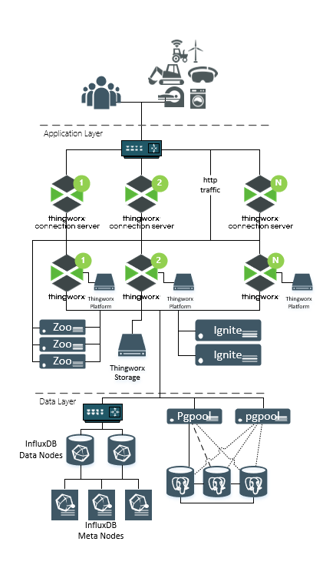

High Scale + High Availability Cluster

In this diagram, each component of the cluster resides in its own physical machine, virtual machine, or container. This provides the most flexibility in terms of scaling each of the different component groups independently.

| List of Components | Number of Components |
|---|---|
| ThingWorx Connection Server | 2..n (based on device count) |
| Load Balancer | 2 or 3 instances: • Route device traffic to Connection Servers • Distribute traffic across ThingWorx Foundation nodes • Distribute traffic across InfluxDB Enterprise data nodes (if used) |
| ThingWorx Foundation Server | 2..n (based on high-availability and scalability requirements) |
| Networked/Enterprise Storage | Disk space for ThingWorx storage repositories, shared with all ThingWorx Foundation servers |
| Ignite | Two options: • Embedded within Foundation processes • 2 or more separate nodes (depending on HA requirements) |
| ZooKeeper | Minimum of 3; use an odd number of nodes |
| Database | Depends on the database: • PostgreSQL: 3 database nodes + 2 pgpool-II nodes • MS SQL Server (not pictured): minimum of 2 as part of a failover configuration |
| InfluxDB Enterprise | 5 (or more): • 3 meta nodes • 2 or more data nodes, with a total count evenly divisible by the replication factor |
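The table above can be read as a set of minimum counts. The following Python sketch checks a proposed topology against them. `ClusterPlan` and `review` are hypothetical names, MS SQL Server is not modeled, and the rule that a third load balancer instance is needed only when InfluxDB Enterprise is used is an interpretation of the Load Balancer row rather than a documented requirement.

```python
from dataclasses import dataclass


@dataclass
class ClusterPlan:
    """Hypothetical description of a proposed ThingWorx HA topology."""
    connection_servers: int
    foundation_servers: int
    load_balancer_instances: int
    zookeeper_nodes: int
    postgres_nodes: int
    pgpool_nodes: int
    ignite_nodes: int = 0               # 0 = Ignite embedded in Foundation processes
    influx_meta_nodes: int = 0          # 0 = InfluxDB Enterprise not used
    influx_data_nodes: int = 0
    influx_replication_factor: int = 1


def review(plan: ClusterPlan) -> list[str]:
    """Return the table minimums that `plan` does not meet (empty if none)."""
    issues = []
    if plan.connection_servers < 2:
        issues.append("use at least 2 Connection Servers")
    if plan.foundation_servers < 2:
        issues.append("use at least 2 Foundation servers")

    uses_influx = plan.influx_meta_nodes > 0 or plan.influx_data_nodes > 0
    # Assumption: the third load balancer instance serves InfluxDB data nodes.
    needed_lbs = 3 if uses_influx else 2
    if plan.load_balancer_instances < needed_lbs:
        issues.append(f"use at least {needed_lbs} load balancer instances")

    if plan.zookeeper_nodes < 3 or plan.zookeeper_nodes % 2 == 0:
        issues.append("use an odd number of ZooKeeper nodes, minimum 3")
    if plan.postgres_nodes < 3 or plan.pgpool_nodes < 2:
        issues.append("use 3 PostgreSQL nodes and 2 pgpool-II nodes")
    # Ignite is either embedded (0 separate nodes) or runs on 2+ separate nodes.
    if plan.ignite_nodes == 1:
        issues.append("run Ignite embedded or on 2 or more separate nodes")

    if uses_influx:
        if plan.influx_meta_nodes != 3:
            issues.append("use 3 InfluxDB Enterprise meta nodes")
        rf = plan.influx_replication_factor
        # rf < 1 short-circuits before the modulo, avoiding division by zero.
        if rf < 1 or plan.influx_data_nodes < 2 or plan.influx_data_nodes % rf != 0:
            issues.append("use 2+ InfluxDB data nodes, evenly divisible by the replication factor")
    return issues


if __name__ == "__main__":
    plan = ClusterPlan(connection_servers=2, foundation_servers=2,
                       load_balancer_instances=2, zookeeper_nodes=3,
                       postgres_nodes=3, pgpool_nodes=2)
    print(review(plan))  # prints []
```

Keeping the rules in one function makes it easy to adjust a single threshold, for example if your PostgreSQL design uses the two-node minimum listed earlier instead of the three nodes shown in this table.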

Minimum Cluster Footprint

In this diagram, components are packed or grouped onto a smaller set of physical/virtual machines, or containers.

This configuration can be used for high availability, but it is less scalable than a distributed configuration because components share resources.

This diagram can also represent deployments in which multiple independent ThingWorx deployments share the same infrastructure (ZooKeeper, database, network storage).

| List of Components (Per Cluster Node) | Number of Components |
|---|---|
| ThingWorx Connection Server | 1 (per cluster node) |
| ThingWorx Foundation Server | 1 (per cluster node) |
| Ignite | None; runs in embedded mode within each Foundation Server process |

| List of Components (Shared) | Number of Components |
|---|---|
| Load Balancer Instances | 2 or 3 instances: • Route device traffic to Connection Servers • Distribute traffic across ThingWorx Foundation nodes • Distribute traffic across InfluxDB Enterprise data nodes (if used) |
| ZooKeeper | Minimum of 3; use an odd number of nodes |
| Databases | See the High Scale + High Availability Cluster table. |
| Networked/Enterprise Storage | Disk space for ThingWorx storage repositories, shared with all ThingWorx Foundation servers |