Issues with Threads

The following are some of the scenarios that indicate performance or other stability issues in your ThingWorx solution:

• Many blocked threads for a long period of time

• Shared resource contention

• Long running threads

This section provides overview of these types of issues.

Blocked Threads

Many blocked threads for a long period of time indicate high resource contention at the JVM level or a potential deadlock. When numerous threads are blocked, and are waiting for a shared resource, for example, database access, users are generally blocked from performing operations.

High contention sometimes resolves itself. For example, when a large database write operation is complete. However, if the threads remain blocked for a long period of time:

• There may be a deadlock situation, that is, threads lock each other in a circular pattern.

• Or, there may be a single blocking transaction, which does not complete processing in the required time

You can check for this issue by:

• Searching for number of blocked threads.

• Check if the thread that is blocked in one snapshot remains blocked in the subsequent snapshots.

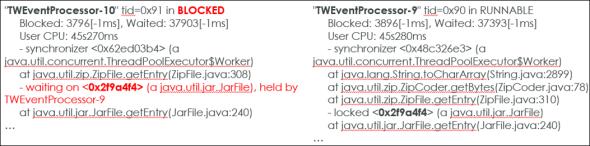

The following image shows a blocked thread:

In this example, the unzip operation in TWEventProcessor-10 is blocked. It is waiting for another unzip operation to complete in the TWEventProcessor-9 thread. Two users could be unzipping the files at the same time in ThingWorx, and the second user has wait for the first one to finish the operation. Both the operations are accessing object 0x2f9a4f4 which is locked in TWEventProcessor-9.

If many event processor threads are also blocked, there can be bigger server stability issues. Additionally, the Event Processing subsystem may show an increase in the number of active threads. It also shows a growing queue of unprocessed events if the blocked scenario does not resolve quickly.

Shared Resource Contention

The database and event threads in ThingWorx can be a performance bottleneck for the following reasons:

• Check the contention for C3P0PooledConnectionPoolManager and TWEventProcessor group of threads. A large number of blocked threads or threads actively working in these categories may indicate a potential performance bottleneck.

• There may be events queued up on the server if all the database and event threads are occupied.

Consider the following example. In this case, an event thread blocks all the database connector threads. The event thread 11 in the call stack is performing a large database update operation.

"C3P0PooledConnectionPoolManager[identityToken->2v1py89n68drc71w36rx7|68cba7db]-HelperThread-#7" tid=0xa0 in BLOCKED

Blocked: 414717[-1ms], Waited: 416585[-1ms]

User CPU: 5s320ms

at java.lang.Object.wait(Native Method)

- waiting on <0x56978cbf> (a com.mchange.v2.async.ThreadPoolAsynchronousRunner), held by TWEventProcessor-11

"C3P0PooledConnectionPoolManager[identityToken->2v1py89n68drc71w36rx7|68cba7db]-HelperThread-#6" tid=0x9f in BLOCKED

Blocked: 415130[-1ms], Waited: 416954[-1ms]

User CPU: 5s530ms

at java.lang.Object.wait(Native Method)

- waiting on <0x56978cbf> (a com.mchange.v2.async.ThreadPoolAsynchronousRunner), held by TWEventProcessor-11

Blocked: 414717[-1ms], Waited: 416585[-1ms]

User CPU: 5s320ms

at java.lang.Object.wait(Native Method)

- waiting on <0x56978cbf> (a com.mchange.v2.async.ThreadPoolAsynchronousRunner), held by TWEventProcessor-11

"C3P0PooledConnectionPoolManager[identityToken->2v1py89n68drc71w36rx7|68cba7db]-HelperThread-#6" tid=0x9f in BLOCKED

Blocked: 415130[-1ms], Waited: 416954[-1ms]

User CPU: 5s530ms

at java.lang.Object.wait(Native Method)

- waiting on <0x56978cbf> (a com.mchange.v2.async.ThreadPoolAsynchronousRunner), held by TWEventProcessor-11

The logic of the custom services performing the operation can be optimized as shown in the following example:

"TWEventProcessor-11" tid=0x92 in RUNNABLE

Blocked: 3913[-1ms], Waited: 37924[-1ms]

User CPU: 45s430ms

at com.thingworx.persistence.common.sql.SqlDataTableProvider.updateEntry(SqlDataTableProvider.java:13)

at com.thingworx.persistence.TransactionFactory.createDataTransactionAndReturn(TransactionFactory.java:155)

at com.thingworx.persistence.common.BaseEngine.createTransactionAndReturn(BaseEngine.java:176)

Blocked: 3913[-1ms], Waited: 37924[-1ms]

User CPU: 45s430ms

at com.thingworx.persistence.common.sql.SqlDataTableProvider.updateEntry(SqlDataTableProvider.java:13)

at com.thingworx.persistence.TransactionFactory.createDataTransactionAndReturn(TransactionFactory.java:155)

at com.thingworx.persistence.common.BaseEngine.createTransactionAndReturn(BaseEngine.java:176)

Long Running Threads

Long-running threads indicate that the custom code in your ThingWorx solution may need optimization. Typical user transactions complete quickly at the JVM level, in subsecond or a few seconds range. However, a long-running thread, for example, running for 10+ minutes, involving a custom code can indicate a problem. Gather several thread snapshots over a 10-minute interval to see how long an operation takes to execute on a specific thread.

For example, a capture during a 60-minute interval shows the same execution pattern on the same thread:

http-nio-8443-exec-25" tid=0xc7 in RUNNABLE

Blocked: 750[-1ms], Waited: 8866[-1ms]

User CPU: 1h35m

- synchronizer <0x35153c2f> (a java.util.concurrent.ThreadPoolExecutor$Worker)

at com.thingworx.types.data.sorters.GenericSorter.compare(GenericSorter.java:93)

at com.thingworx.types.data.sorters.GenericSorter.compare(GenericSorter.java:20)

at java.util.TimSort.countRunAndMakeAscending(TimSort.java:360)

at java.util.TimSort.sort(TimSort.java:234)

at java.util.Arrays.sort(Arrays.java:1512)

at java.util.ArrayList.sort(ArrayList.java:1454)

at java.util.Collections.sort(Collections.java:175)

at com.thingworx.types.InfoTable.quickSort(InfoTable.java:722)

…

Blocked: 750[-1ms], Waited: 8866[-1ms]

User CPU: 1h35m

- synchronizer <0x35153c2f> (a java.util.concurrent.ThreadPoolExecutor$Worker)

at com.thingworx.types.data.sorters.GenericSorter.compare(GenericSorter.java:93)

at com.thingworx.types.data.sorters.GenericSorter.compare(GenericSorter.java:20)

at java.util.TimSort.countRunAndMakeAscending(TimSort.java:360)

at java.util.TimSort.sort(TimSort.java:234)

at java.util.Arrays.sort(Arrays.java:1512)

at java.util.ArrayList.sort(ArrayList.java:1454)

at java.util.Collections.sort(Collections.java:175)

at com.thingworx.types.InfoTable.quickSort(InfoTable.java:722)

…

In the example, the thread is performing a sort on an infotable. The sorting operation continues while iterating through each item in a large infotable. The operation can be optimized to occur once at the end. Long running operations create contention either at the memory level, the database level, or on other server resources. Therefore, identifying and addressing long-running services in your custom ThingWorx solution is important.