Interpreting Prediction Processor Output

The format in which prediction results are output depends on the prediction processor and the type of job. Batch scoring jobs, from the Asynchronous Prediction custom processor, output a job ID, which can be used in the Scoring Results processor to retrieve results in CSV format. Real-time scoring results, from the Synchronous Prediction custom processor, are output directly to your pipeline in a specific JSON format.

Regardless of the format, the columns of data in the results are interpreted the same way. For both batch scoring and real-time scoring, the specific columns in the output vary according to the parameters you configure for the scoring processor. The following types of columns can be included in prediction results:

• Identifier Fields – These fields can be added to a scoring process to help identify specific results. They are output in the results with the parameter names you configure in the scoring processor.

• Predicted Goal – The predicted value of the goal variable for a specific result record. This column represents the predicted value after the model output has been denormalized or transformed.

• Model Output – The PMML score for the predicted goal before the value is denormalized or transformed. A suffix of _mo is attached to the column name to identify it as the raw model output score. The meaning of the model output information varies based on the OpType of the goal variable:

◦ Boolean – The model output score indicates an estimated probability that the value is true. However, in several scenarios this value cannot be considered a true probability.

Depending on the model type, you might see _mo values that are greater than one or less than zero. An output transformation converts this value to a predicted goal of true or false.

◦ Continuous – The model output score is simply a normalized goal value. Because the underlying model trains on normalized data, the scoring process outputs normalized prediction scores. An output transformation denormalizes this value to report a predicted goal using the original scale.

◦ Categorical – The model output score reports an estimated probability for each available category. As with Boolean data, the model output score may not represent true category probabilities.

Depending on the model type, you might see _mo values that are greater than one or less than zero. An output transformation selects the most probable category as the predicted goal value. This OpType is not available for use in Analytics Builder.

◦ Ordinal – For model training purposes, an ordinal goal (such as xs, s, m, l, xl) is treated like a continuous variable. The model output score represents a normalized version of the goal value. An output transformation converts this value to a predicted goal value using the original ordinal categories. This OpType is not available for use in Analytics Builder.

• Important Fields – The fields that have the most influence on the value of the goal variable in each result row. The number of important fields returned is determined by the value you enter in the IMPORTANT_FIELD_COUNT parameter when you configure the scoring processor.

• Important Field Weights – For each important field, a field weight represents the relative impact of that field on the goal variable. If the field weights for all the fields in one set of training data could be added together, the sum would equal 1. Because only the top important fields are returned, the weights in each row of the sample results below sum to slightly less than 1.

• Error Message – This column displays any errors that occur while trying to score a specific row of data.
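The relationship between a _mo value and the predicted goal can be sketched in Python. This is an illustration only, not the product's implementation: the 0.5 cutoff for Boolean goals, the min-max scaling for continuous goals, and the highest-score rule for categorical goals are all assumptions, and the function names are hypothetical. The transformation that is actually applied is defined by the model and may differ.

```python
def boolean_goal(mo, cutoff=0.5):
    """Map a raw Boolean _mo score to true/false.

    The cutoff of 0.5 is an assumption. Scores above 1 or below 0
    are still handled, because only the comparison matters.
    """
    return mo >= cutoff


def continuous_goal(mo, goal_min, goal_max):
    """Denormalize a continuous _mo score to the original scale.

    Assumes min-max normalization over [goal_min, goal_max]; the
    real model may use a different normalization.
    """
    return goal_min + mo * (goal_max - goal_min)


def categorical_goal(scores):
    """Pick the category with the highest _mo score.

    `scores` is a hypothetical dict of category name -> raw score.
    """
    return max(scores, key=scores.get)
```

Under these assumptions, a Boolean _mo of 1.07 still maps to true and one of -0.02 maps to false, which is why out-of-range scores do not prevent a valid predicted goal.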

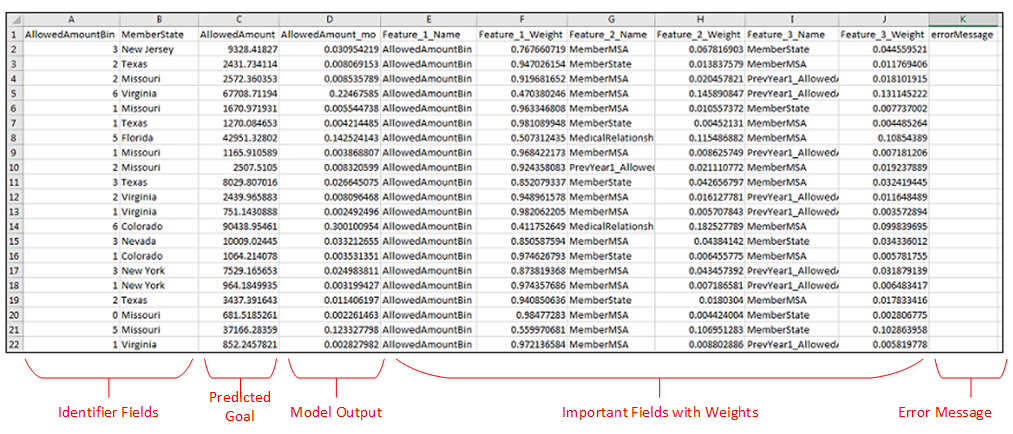

Sample – Batch Scoring Results

The following sample results were output from an asynchronous predictive scoring job as a CSV string. The scoring job included two identifier fields and three important fields. In the figure below, the results have been opened in an Excel spreadsheet.

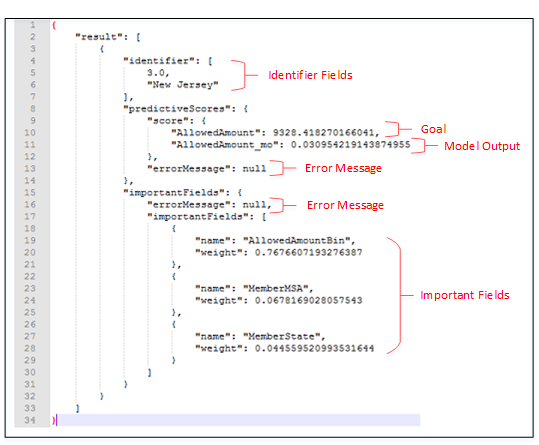
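Instead of opening the CSV string in a spreadsheet, you can read it programmatically. The following Python sketch uses only the standard library. The CSV text and every column name in it are made up for illustration and do not reproduce the figure above; only the _mo suffix comes from the description of the Model Output column. Substitute the column names that your scoring processor actually returns.

```python
import csv
import io

# Made-up stand-in for a batch results string. All column names and
# values here are hypothetical, except for the documented _mo suffix.
SAMPLE = (
    "id,site,goal,goal_mo,field_1,weight_1,field_2,weight_2\n"
    "1,A,true,0.91,temp,0.40,pressure,0.25\n"
    "2,B,false,-0.03,speed,0.35,temp,0.30\n"
)


def read_results(text):
    """Parse a CSV results string into a list of row dictionaries."""
    return list(csv.DictReader(io.StringIO(text)))


def model_output_columns(rows):
    """Return the names of the raw model output columns (suffix _mo)."""
    if not rows:
        return []
    return [name for name in rows[0] if name.endswith("_mo")]


def weight_total(row, weight_columns):
    """Sum the important field weights reported for one result row."""
    return sum(float(row[name]) for name in weight_columns)


rows = read_results(SAMPLE)
```

Calling weight_total on each row is a quick way to confirm that the returned weights stay at or below 1.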

Sample – Real-time Scoring Results

The following sample results were output from a synchronous predictive scoring process in JSON format and returned to a DataFlow pipeline. The scoring process included two identifier fields and three important fields.