Anomaly Detection Architecture in a ThingWorx Cluster

In ThingWorx cluster mode, Anomaly Detection functionality requires installation of both Analytics Server and Platform Analytics. Anomaly Detection must be able to leverage Property Transform components, which are available only from the Platform Analytics installer.

Standalone vs. Cluster Mode

Why does Anomaly Detection in a ThingWorx cluster require the Property Transform components? When Anomaly Detection is deployed on a standalone server, a new anomaly monitor is generated each time you create an anomaly alert on a Thing property. The anomaly monitor begins monitoring changes to the Thing property and builds property-specific historical data in memory. This data is used to calculate whether new data points are anomalous.

This approach breaks down when Anomaly Detection is deployed in a cluster of ThingWorx servers. In cluster mode, a given property can be updated by any server in the cluster. An anomaly monitor on one server cannot monitor properties updated on another server. The key to resolving this issue is to move the anomaly monitor calculations outside of ThingWorx so that each server in the cluster can send property updates to a central location.
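The difference can be illustrated with a small simulation. This is only a sketch under stated assumptions: the round-robin distribution, the function name `distribute_updates`, and the sample values are hypothetical and do not reflect how ThingWorx routes updates internally. It shows that per-server, in-memory histories each hold only a fragment of the property's data, while a central location can rebuild the complete history.

```python
from collections import defaultdict

def distribute_updates(updates, server_count):
    """Assign each (sequence, value) update to a server round-robin,
    roughly as a load balancer in front of a cluster might (assumption)."""
    per_server = defaultdict(list)
    for sequence, value in enumerate(updates):
        per_server[sequence % server_count].append((sequence, value))
    return dict(per_server)

# Hypothetical updates to a single Thing property.
updates = [20.1, 20.3, 19.9, 20.2, 35.0, 20.0]
per_server = distribute_updates(updates, server_count=3)

# Standalone-style monitors: each server keeps only what it received.
local_histories = {
    server: [value for _, value in received]
    for server, received in per_server.items()
}

# Central monitor: every server forwards its updates to one location,
# where they are merged back into a single ordered history.
forwarded = [item for received in per_server.values() for item in received]
central_history = [value for _, value in sorted(forwarded)]

print(local_histories)
print(central_history)
```

In this sketch, no single server sees more than two of the six updates, so a monitor held in one server's memory could not judge the full series. The central history contains all six values in order.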

Property Transform already includes all of the components necessary to receive streaming data from ThingWorx, perform calculations, and return results. With a few changes, these components can also be used to deploy Anomaly Detection in a ThingWorx cluster.

What to Know at Installation

If you plan to use Anomaly Detection in ThingWorx cluster mode, the following configurations must be selected during installation of ThingWorx Analytics components:

• Analytics Server – When configuring the ThingWorx connection information, enable the option to let the installer automatically configure the AlertProcessingSubsystem for Anomaly Detection. In the graphical or text mode installation, the option is called Configure Anomaly Detection to use this instance of Analytics Server. In silent mode, the parameter is called AUTO_CONFIG_ALERT_SUBSYSTEM.

• Platform Analytics – When selecting components to install, include Property Transform Microserver and the subcomponent under it called Integration with Analytics Server for Anomaly Detection. Later in the installation, you will be prompted for Analytics Server connection information, including the IP address or host name, the port number for the Async microserver, and the API Key that was automatically generated at the end of the Analytics Server installation.

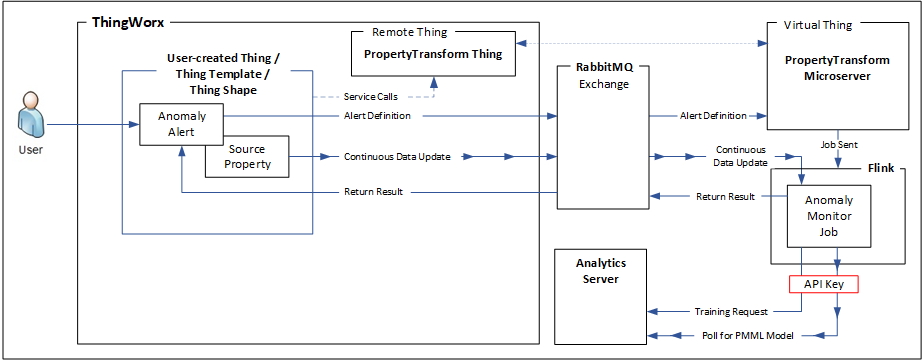

Components

The Anomaly Detection architecture in a ThingWorx cluster includes the components described below. For information about how the components interact, see Process Flow.

• PropertyTransform Microserver – This microserver subscribes to the metadata queue of the RabbitMQ message exchange. It receives notifications of changes to anomaly alerts on user-created Things, Thing Templates, or Thing Shapes, and is responsible for starting and stopping anomaly monitor jobs in the external Apache Flink processing engine.

During installation of the PropertyTransform microserver, a Java service starts and spins up a Virtual Thing. The Virtual Thing contacts ThingWorx and creates a corresponding Remote Thing, called PropertyTransformThing.

• PropertyTransform Thing – This Remote Thing is an extension of the corresponding microserver. You can see it in your list of Things in ThingWorx, but you only need to interact with it to verify installation. When a call is made to create an anomaly alert, the Remote Thing is contacted behind the scenes to validate that the PropertyTransform microserver is connected.

• User-created Thing/Thing Template/Thing Shape – When you create an anomaly alert on a Thing, Thing Template, or Thing Shape, you can define the parameters of the alert, including the outbound anomaly rate, minimum data collection time, and certainty. Changes to the alert are written to the RabbitMQ message exchange and are then read by the PropertyTransform microserver.

Subsequent updates to source property data are sent continuously to the Flink anomaly monitor job, via a RabbitMQ exchange.

• Flink – Apache Flink is a stream processing engine external to ThingWorx. Flink manages the data stream and performs the calculations based on the user-provided alert definition. To support the streaming process, Flink communicates through the RabbitMQ message exchange, both to receive data and to return computation results.

• RabbitMQ Exchange – This external message broker handles event messaging between the Anomaly Detection components. ThingWorx passes anomaly alert definitions to the PropertyTransform Microserver over the RabbitMQ exchange. It also continuously passes updated data from the source property in ThingWorx to the Flink anomaly monitor job. Flink returns calculation results, via a RabbitMQ result queue, to the anomaly alert in ThingWorx. Each Thing that includes an anomaly alert has its own result queue and calculation results are written to the appropriate queue.

• Analytics Server – Anomaly Detection uses PMML models to determine if incoming data points are anomalous. These PMML models are trained and stored by Analytics Server. Anomaly monitor jobs communicate with Analytics Server to orchestrate this process.
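The per-Thing result queues described under RabbitMQ Exchange can be pictured with a minimal in-memory sketch. It does not use RabbitMQ or any ThingWorx API. The class `ResultRouter`, its methods, and the Thing names are hypothetical and serve only to illustrate that each Thing with an anomaly alert reads results from its own queue.

```python
from collections import deque

class ResultRouter:
    """Hypothetical stand-in for a broker with one result queue per Thing."""

    def __init__(self):
        self._queues = {}

    def publish(self, thing_name, result):
        # Create the Thing's queue on first use, then append the result.
        self._queues.setdefault(thing_name, deque()).append(result)

    def consume(self, thing_name):
        # Return the oldest pending result for this Thing, or None if empty.
        queue = self._queues.get(thing_name)
        return queue.popleft() if queue else None

router = ResultRouter()
router.publish("Pump01", {"property": "pressure", "anomalous": False})
router.publish("Pump02", {"property": "pressure", "anomalous": True})
router.publish("Pump01", {"property": "pressure", "anomalous": True})

first_pump01 = router.consume("Pump01")
first_pump02 = router.consume("Pump02")
second_pump01 = router.consume("Pump01")
empty_pump02 = router.consume("Pump02")
```

Results published for one Thing never appear in another Thing's queue, and each queue preserves the order in which its results were written.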

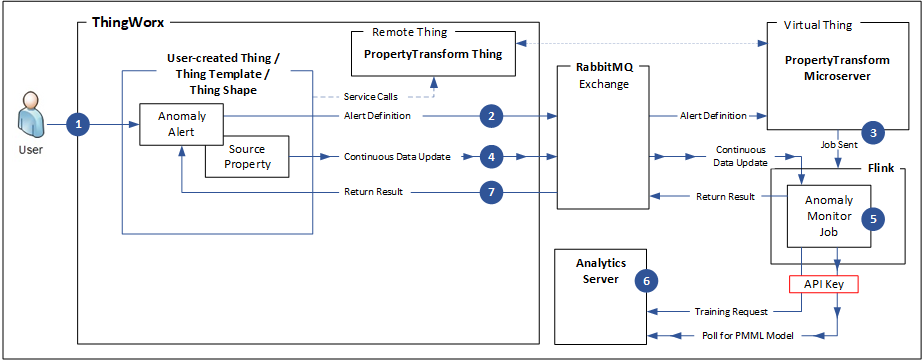

Process Flow

1. When you add an anomaly alert to a Thing, Thing Template, or Thing Shape in ThingWorx, you define various aspects of the alert. This alert definition includes information about the outbound anomaly rate, minimum data collection time, and certainty.

2. The alert definition is sent through the RabbitMQ exchange to the PropertyTransform microserver.

3. When the microserver receives the alert definition, it starts a Flink anomaly monitor job.

4. Meanwhile, when any changes occur on a source property that includes an anomaly alert, ThingWorx publishes the updates to the RabbitMQ exchange. RabbitMQ continuously sends the updated data to Flink for use in anomaly monitor jobs.

5. Flink monitors the incoming data and performs the anomaly monitor calculations according to the alert definition.

6. When enough data has been collected, the anomaly monitor job on Flink sends a REST training request to Analytics Server. Analytics Server creates and stores a PMML model. The anomaly monitor job polls Analytics Server until it receives a PMML model to use in its calculations.

The communication between anomaly monitor jobs on Flink and the Analytics Server APIs is the reason an API Key is required during Platform Analytics installation.

7. The computation results are returned, via RabbitMQ, from Flink to the anomaly alert in ThingWorx. If the results indicate that a recent data point is anomalous, an alert can be triggered.
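The collect, train, poll, and score sequence in steps 4 through 7 can be sketched as a small state machine. This is an illustration under stated assumptions, not the product's implementation. `FakeAnalyticsServer`, `AnomalyMonitorJob`, `min_points`, and `threshold` are hypothetical names. The "model" here is a simple mean and standard deviation standing in for the PMML model. Polling happens once per incoming update rather than on a timer, and no REST calls, Flink jobs, or RabbitMQ queues are involved.

```python
import statistics

class FakeAnalyticsServer:
    """Hypothetical stand-in: a trained model becomes available after a few polls."""

    def __init__(self, polls_until_ready=2):
        self._polls_until_ready = polls_until_ready
        self._jobs = {}

    def request_training(self, job_id, values):
        # Stand-in for a PMML model: mean and population standard deviation.
        model = (statistics.mean(values), statistics.pstdev(values))
        self._jobs[job_id] = {"polls": 0, "model": model}

    def poll_model(self, job_id):
        # Return None until the job has been polled enough times.
        job = self._jobs[job_id]
        job["polls"] += 1
        if job["polls"] < self._polls_until_ready:
            return None
        return job["model"]

class AnomalyMonitorJob:
    """Hypothetical monitor job: collect data, request training, poll, then score."""

    def __init__(self, job_id, server, min_points, threshold=3.0):
        self.job_id = job_id
        self.server = server
        self.min_points = min_points
        self.threshold = threshold
        self.history = []
        self.training_requested = False
        self.model = None

    def on_update(self, value):
        """Return True or False once a model exists, otherwise None."""
        if self.model is None:
            if not self.training_requested:
                # Still collecting: store the value and train when enough exist.
                self.history.append(value)
                if len(self.history) >= self.min_points:
                    self.server.request_training(self.job_id, self.history)
                    self.training_requested = True
                return None
            # Training was requested: poll for the model.
            self.model = self.server.poll_model(self.job_id)
            if self.model is None:
                return None
        mean, std = self.model
        return abs(value - mean) > self.threshold * std

server = FakeAnalyticsServer(polls_until_ready=2)
job = AnomalyMonitorJob("job-1", server, min_points=4)
results = [job.on_update(v) for v in [10.0, 10.2, 9.8, 10.0, 10.1, 10.1, 12.0]]
print(results)
```

In this run, the first four updates are only collected, the fifth finds the model not yet ready, the sixth is scored as normal, and the seventh is flagged as anomalous.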