Installing and Configuring a Cluster Windchill Environment

This section provides detailed information for installing and configuring a clustered Windchill system, with attention to file vault management and data loading.

Server Cluster Configuration Overview

A cluster is a collection of computers that can be accessed independently or as a single unit. The cluster can take a single work unit, defined as either an HTTP request or RMI method call, and distribute it to one of many Windchill servers.

The main advantages of a cluster over a single server are increased performance, scalability, and reliability. Performance increases because Windchill server processes have less competition on a given node. The system is scalable: as the load increases, additional Windchill servers can be added. This scalability also provides a more reliable system. When a Windchill server fails, requests can be directed to the remaining servers.

Configuring a Typical Server Cluster

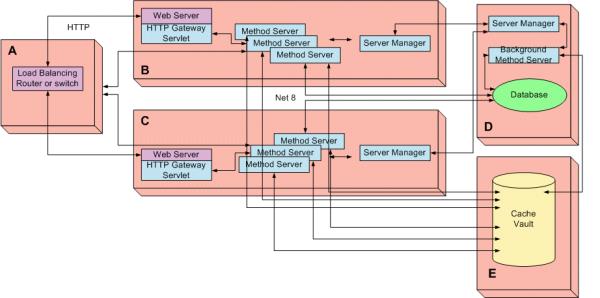

The figure above shows a typical cluster configuration. In this figure, five computers (A, B, C, D, and E) work together to increase performance, scalability, and reliability.

A typical Windchill cluster consists of three segments:

• A load-balancing router (A)

• A persistence storage server or main cache (D)

• One or more additional Windchill servers or cache clients (B and C)

As shown in the diagram, the Windchill cluster interacts with a server where the physical vault is maintained (E). Setting up the Windchill interaction with a file server is not discussed in this set of topics. For setup details, see File Server Administrator.

The load balancing router takes requests from clients and distributes them among one of the many Windchill servers. To the rest of the network, this router appears as a single Windchill server. For information on the load balancer, see the section Configuring a Load Balancing Router.

Windchill servers that participate in a cluster are similar to a simple autonomous Windchill server except that they share a database and caching mechanism with other members of the cluster. Since caches are subject to updates from a top-level main cache, these server nodes are also known as cache clients. They share a common DNS name to direct clients to the cluster instead of the individual Windchill server for subsequent requests.

The persistence storage server machine contains the relational database as well as the main Windchill cache and background method servers. For any deployment, a single database is used to store persistent data for the entire cluster even when the database is clustered. For a clustered deployment, a separate server manager must be deployed and designated as the “Main Cache”. This server manager has the responsibility of synchronizing the caches across all client caches.

The server-manager process on each node in the cluster manages the caching. In the previous figure, the server-manager running on the persistence storage server also serves as the cluster main cache. Additional properties must be set in the wt.properties file to properly configure the distributed caching mechanism.

Use the xconfmanager utility to edit Windchill properties files. For details, see About the xconfmanager Utility.

In a cluster system, you can create a representation using files from your local disk through a create representation window. For more information, see Viewing Representations.

Main Server Manager Fail Over

At any given point in time, there is a single cache main server manager. By default, this main server manager is initially selected through negotiation with other server managers. Generally, this is the server manager on the first cluster node that is started. After the cluster selects a main cache node, this server manager remains the main cache until it becomes unreachable. If this occurs, another server manager automatically becomes the main server manager. After a switch in main cache nodes, if the previous main server manager becomes reachable, it reverts to a secondary cache status.
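The failover behavior described above can be illustrated with a toy model. This is a minimal sketch, not Windchill code: the `CacheCluster` class and its methods are hypothetical, and "earliest started reachable node wins" is an assumption that stands in for the real negotiation between server managers.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Set


@dataclass
class CacheCluster:
    """Toy model of main cache selection (hypothetical, for illustration)."""
    started: List[str] = field(default_factory=list)   # nodes in start order
    reachable: Set[str] = field(default_factory=set)   # nodes currently up
    main: Optional[str] = None                          # current main cache

    def start(self, node: str) -> None:
        # A node that starts (or comes back) joins the cluster. It only
        # becomes the main cache if no main cache currently exists.
        if node not in self.started:
            self.started.append(node)
        self.reachable.add(node)
        self._negotiate()

    def fail(self, node: str) -> None:
        # An unreachable main cache gives up its role.
        self.reachable.discard(node)
        if node == self.main:
            self.main = None
        self._negotiate()

    def _negotiate(self) -> None:
        # Assumption: the earliest started reachable node is selected.
        if self.main is None:
            for candidate in self.started:
                if candidate in self.reachable:
                    self.main = candidate
                    break


cluster = CacheCluster()
for host in ("D", "B", "C"):
    cluster.start(host)
print(cluster.main)   # D: first node started

cluster.fail("D")
print(cluster.main)   # B: another server manager takes over

cluster.start("D")
print(cluster.main)   # B: D rejoins as a secondary cache
```

The last step shows the point made in the text: a previous main server manager that becomes reachable again does not reclaim the role.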

Configuring a Load Balancing Router

The load balancing router takes requests from clients and distributes them among the available Windchill servers. This router may also act as a firewall, separating the Windchill cluster from the rest of the network. To the rest of the network, this router appears as a single Windchill server.

The load balancer configuration in the previous description refers to a particular type of load balancer, the Alteon load balancer. Instructions may differ for different load balancers. In addition, the load balancer in the PDS Reference Architecture only balances traffic to web servers. Since the PDS Reference Architecture implements a split web server system, the load balancing of traffic from the web servers to the servlet engine and the method servers is handled using AJP13 load balancing.

Configuring Affinity

End users typically access a Windchill cluster through a load balancing router. The load balancing router dispatches their requests across several machines in an attempt to distribute the workload evenly. However, configuring affinity within the load balancer can take advantage of the servlet engine session cache and helps when processing multi-step operations. This configuration directs subsequent related requests to the same servlet engine and method server for processing.

The load balancing router must load balance all TCP traffic and IP traffic that is allowed across this boundary. Thus, if you limit the traffic to HTTP or HTTPS (for example, by tunneling RMI over HTTP or HTTPS), then you only need a load balancing router that handles HTTP or HTTPS. If you allow other TCP communication and IP communication across this boundary, such as direct RMI traffic, then it too must be load balanced.

The method servers have no additional affinity requirements when tunneling RMI over HTTP or HTTPS. However, the load balancer must still properly enforce affinity for HTTP and HTTPS traffic which will include tunneled traffic. If direct RMI ports are load balanced by the routers, then the load balancer must enforce affinity for these ports. Since RMI is not a protocol that can handle cookies or other tags for affinity purposes, this usually will be enforced using client host affinity over a period of time.

However, Windchill also has affinity requirements for its servlet engines, and requires either client host affinity or session affinity. Client host affinity maps all requests from a given host during a given time period to the same servlet engine JVM, thus ensuring that all session data from the host remains with that JVM. Session affinity is more precise and is the best match for what servlet engines actually require.

Whether the IP load balancing router is responsible for ensuring this affinity depends on your architecture. For example, you could have an IP load balancing router balancing between several machines, each running an Apache-based web server with mod_jk and load balancing over all servlet engines on all of the machines. If you do this correctly, then mod_jk ensures session affinity and your IP load balancer does not have to ensure any affinity. However, incoming requests are likely to be directed to an arbitrary web server for initial processing. The requests are then directed to a servlet engine on a different machine for subsequent processing.

Alternatively, you could use an Apache-based web server and mod_jk as your load balancer, having just one web server on a "load balancer" machine and having it send out requests to the servlet engines on the cluster nodes. This approach requires that all non-HTTP(S) traffic be tunneled over HTTP(S).

The easiest approach is to configure the load balancing router with host affinity for all TCP connections and IP connections. This ensures that all requests from a specific client host will, during a specific period of time, be handled by the same node of the cluster.
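Client host affinity over a period of time can be sketched as a small routing table. This is an illustration only: the `HostAffinityBalancer` class, the round-robin choice for new clients, and the 30-minute window are all assumptions, and a real load balancer implements this in its own configuration rather than in application code.

```python
import itertools
import time


class HostAffinityBalancer:
    """Hypothetical sketch of client host affinity with a time window."""

    def __init__(self, nodes, ttl_seconds=1800.0, clock=time.monotonic):
        self._next_node = itertools.cycle(list(nodes))  # for new clients
        self._ttl = ttl_seconds                         # affinity window
        self._clock = clock
        self._sticky = {}   # client host -> (node, expiry time)

    def route(self, client_host):
        now = self._clock()
        entry = self._sticky.get(client_host)
        if entry is not None and now < entry[1]:
            node = entry[0]                 # affinity still valid
        else:
            node = next(self._next_node)    # new or expired client
        # Each request refreshes the affinity window for that host.
        self._sticky[client_host] = (node, now + self._ttl)
        return node


balancer = HostAffinityBalancer(["B", "C"])
print(balancer.route("10.0.0.7"))   # B
print(balancer.route("10.0.0.8"))   # C
print(balancer.route("10.0.0.7"))   # B again: same host, same node
```

Because the key is the client host rather than a cookie or session ID, the same table works for direct RMI connections, which is why the text recommends host affinity when RMI ports are load balanced.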

Configurations vary widely depending on load balancing software and hardware. This set of topics only discusses configuration for the Windchill server systems.

Determining the Necessary Ports for Load Balancer or Firewall

Client systems typically access a Windchill cluster server environment through a load balancing router. Because of security concerns or a limited number of available ports, you need to determine which TCP ports and IP ports must be open on the load balancer when a cluster environment integrates workgroup managers and Windchill visualization.

The requirements on the communication between client and server are complicated by the many possible communication environments. To simplify the requirement of ports for the communication between client and server, this discussion makes the following assumptions:

• There is only one line of communication between the client site and server site.

• The client site consists only of HTTP clients and HTTPS clients.

• All Windchill server components, such as web server, servlet engine, LDAP, and Oracle are in the server site.

• A single load balancer and firewall has been set up between the client and server sites.

If you tunnel RMI over HTTP or HTTPS, then only HTTP or HTTPS ports need to be open on the load balancer. Otherwise, the ports that need to be open depend on the customer environment:

• For out-of-the-box core Windchill, the following ports must be open:

◦ HTTP or HTTPS ports

◦ wt.manager.port and wt.method.minPort through wt.method.maxPort defined in wt.properties

• For workgroup managers, additional ports need to be open depending on the specific product. The Windchill Workgroup Managers for CADDS5 and CATIA V4 use a Registry Server (RS) that runs along with Windchill servers. The Registry Server uses two ports. Each time the Registry Server is started, it uses the port specified in the registryserver.ini file and a different, random port. Access must be granted on both ports for the Windchill workgroup managers to function.

Use the "registry_port" defined in registryclient.ini and registryserver.ini under <Windchill>/codebase/cfg/site to manually assign the ports.

The unified Workgroup Manager framework, used by the Workgroup Manager for Creo Parametric and most other workgroup managers, is a regular HTTP client. Therefore, the server configurations that apply to all HTTP clients (for example, web browsers) apply to Workgroup Manager for Creo Parametric and most other workgroup managers.

For details on the ports set through the PTC Solution Installer (PSI), see Prerequisite Checklist.
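The port rule for out-of-the-box core Windchill can be sketched as a small helper that derives the list of ports to open from wt.properties values. The function name `ports_to_open` is hypothetical, and the port numbers below are example values chosen for illustration, not values taken from this document. Registry Server ports for workgroup managers are not covered.

```python
def ports_to_open(props, web_ports, tunnel_rmi):
    """Return the sorted ports a load balancer or firewall must pass.

    props      -- dict of wt.properties values (strings)
    web_ports  -- HTTP or HTTPS ports used by the web server
    tunnel_rmi -- True if RMI is tunneled over HTTP or HTTPS
    """
    ports = set(web_ports)
    if not tunnel_rmi:
        # Direct RMI: the server manager port plus the full method
        # server port range must also be open.
        ports.add(int(props["wt.manager.port"]))
        low = int(props["wt.method.minPort"])
        high = int(props["wt.method.maxPort"])
        ports.update(range(low, high + 1))
    return sorted(ports)


# Example values only; read the real ones from your wt.properties file.
example_props = {
    "wt.manager.port": "5001",
    "wt.method.minPort": "5002",
    "wt.method.maxPort": "5011",
}

print(ports_to_open(example_props, [443], tunnel_rmi=True))
print(ports_to_open(example_props, [443], tunnel_rmi=False))
```

With tunneling enabled, only the web port is returned. Without it, the result also contains the server manager port and every port from wt.method.minPort through wt.method.maxPort.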

Configuring the Windchill Environment for Clustering

PTC recommends two classes of cluster configuration, depending on individual needs. In the first class, every node in the cluster is identical, and each node can negotiate to become a main cache node. This type of cluster is referred to as an identical nodes cluster. In the second class, separate secondary and main nodes are configured. This allows for dedicated main cache nodes and dedicated foreground secondary cache nodes. This type of cluster is referred to as a dedicated mains cluster.

All Windchill cluster nodes must be configured similarly so that any one of them can respond to the same request with the same response. Clients access this system as a single server, though several servers respond to the requests in the background.

To configure a Windchill environment for clustering, review the following sections and complete the instructions in the sections as they apply to your environment:

• Configuring an Identical Node Cluster

• Configuring a Cluster with a Dedicated Main Node

• Configuring Windchill Adapters in a Cluster Configuration (Optional)

If you are considering configuring high availability queue processing, see Configuring the Worker Agent in a High Availability Windchill Cluster for more information.

Configuring an Identical Node Cluster

In the example configuration, all cluster nodes are configured identically. A single cluster node that is properly configured can be synchronized to other nodes and can perform within the cluster without any modifications.

The configuration requires that all HTTP access goes through the main IP load balancer. The following Example Properties section lists the minimum configuration properties. Detailed descriptions of the properties can be found in the section Understanding Properties Used in Examples.

Example Properties

All cluster nodes require the following properties (found in the wt.properties file):

wt.rmi.server.hostname=A

wt.cache.main.secondaryHosts=B, C, D

Configuring a Cluster with a Dedicated Main Node

In the example configuration, all cluster nodes are configured identically. However, only specific nodes are allowed to become the main cache node. A single cluster node that is properly configured can be synchronized to other nodes and can perform within the cluster without any modifications.

The configuration requires that all HTTP access goes through the main IP load balancer. The following Example Properties section lists the minimum configuration properties. Detailed descriptions of the properties can be found in the section Understanding Properties Used in Examples.

Example Properties

All cluster nodes require the following properties (found in the wt.properties file):

wt.rmi.server.hostname=A

wt.cache.main.secondaryHosts=B, C, D

wt.cache.main.hostname=D

In the example diagram, only host D is allowed to be the main cache. If you have multiple hosts that you want to allow as the main cache, those host names can be added to the wt.cache.main.hostname property value.

Understanding Properties Used in Examples

The following table lists the properties used in the previous examples and describes their use:

| Property | Description |
|---|---|
| wt.rmi.server.hostname=A | Each node in the cluster must act as if it were the cluster in its entirety. A request to an individual node in the cluster needs to generate the same response as every other node in the cluster. Set the local machine host lookup to resolve the common name to the current node. The following entry should be added in the server host file (UNIX host file is /etc/hosts; Windows host file is <WNNT>/system32/drivers/etc/hosts): 127.0.0.1 A For clients to access these nodes as a single-server cluster, the RMI host name must be set to the commonly known name for the entire cluster, and in this case host A. This property should be set during installation. |
| wt.cache.main.secondaryHosts=B, C, D | This property is required on all cluster nodes to allow the JMX management components to communicate from each node. The property also tells the Windchill cluster nodes that hosts in this property are trusted and allowed to access the secondary node. |
| wt.cache.main.hostname=D | This property defines the host names that are allowed to become a main cache. When this property is not defined, any host in the cluster can become a main cache. When set, this property must be set on all cluster nodes. |
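The rules in the table lend themselves to a quick consistency check across nodes. The following is a rough sketch under stated assumptions: `parse_properties` and `check_cluster` are hypothetical helpers, the parser handles only simple `key=value` lines rather than the full Java properties syntax, and requiring identical wt.cache.main.hostname values on every node is an interpretation of "must be set on all cluster nodes".

```python
def parse_properties(text):
    """Parse simple key=value lines; comments and blank lines are skipped."""
    props = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith(("#", "!")):
            continue
        key, _, value = line.partition("=")
        props[key.strip()] = value.strip()
    return props


def check_cluster(node_props):
    """Return a list of problems found in a {node: properties} mapping."""
    problems = []

    # Every node must advertise the same common cluster name.
    names = {p.get("wt.rmi.server.hostname") for p in node_props.values()}
    if len(names) != 1 or None in names:
        problems.append(
            "wt.rmi.server.hostname must be the same common name on every node")

    # The trusted host list is required on all nodes.
    for node, props in sorted(node_props.items()):
        if "wt.cache.main.secondaryHosts" not in props:
            problems.append(f"{node}: wt.cache.main.secondaryHosts is missing")

    # Assumption: the main cache restriction is set identically
    # everywhere or not set at all.
    mains = {p.get("wt.cache.main.hostname") for p in node_props.values()}
    if len(mains) != 1:
        problems.append(
            "wt.cache.main.hostname must be set on all nodes or on none")

    return problems


shared = "wt.rmi.server.hostname=A\nwt.cache.main.secondaryHosts=B, C, D\n"
nodes = {
    "B": parse_properties(shared + "wt.cache.main.hostname=D\n"),
    "C": parse_properties(shared),   # main cache restriction left out
    "D": parse_properties(shared + "wt.cache.main.hostname=D\n"),
}
for problem in check_cluster(nodes):
    print(problem)
```

In this example, node C omits wt.cache.main.hostname, so the check reports the inconsistency. Changes to the actual files should still be made with the xconfmanager utility.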

Configuring Windchill Adapters in a Cluster Configuration (Optional)

A Windchill adapter is automatically configured for foreground method servers running on the Windchill secondary cache nodes. A single Windchill adapter is configured during the installation phase. Whenever a Windchill adapter starts, its configured port range is compared to the port range used by Windchill RMI (as configured by the wt.method.minPort and wt.method.maxPort values in wt.properties). If the configured port range is smaller than the one in use by Windchill RMI, it is automatically increased to match in size.

For complete details on configuring the Windchill adapter, see the section Windchill Adapter.

Configuring Method Server and Background Method Servers on Cluster Nodes

There is no restriction on how many MethodServer and BackgroundMethodServer instances are allowed on each cluster node. In a cluster with a dedicated main node, PTC recommends that background queue processing be dedicated to BackgroundMethodServer instances on the main nodes. For more information, see the following sections:

• For configuring additional MethodServer instances, refer to the section Configuring Windchill Properties for Multiple Method Servers under Advanced Windchill Configuration.

• For configuring background method servers, refer to the section Configuring Background Method Servers under Configuring Background Method Servers.