Integration with External Systems

Support for Outbound Data Flow

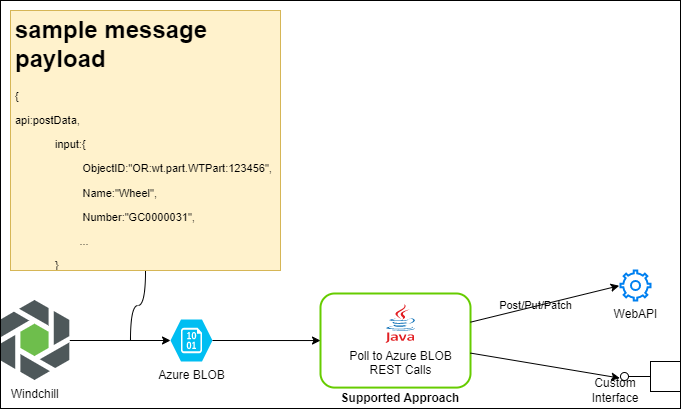

When Windchill ESI is installed, the ESI module provides an API for outbound data flow. If the Windchill server is configured to store content in an Azure storage account using the Blob Configuration tool, and the property esi.azure.storage.container in wt.properties is set to the appropriate container name, this API can write text to a blob within that container. The details of the API are as follows:

Package: com.ptc.windchill.esi.utl

Class: ESIBlobUtility

Method: writeTextToAzureBlob

Parameters: String blobNameExcludingContainerName, String text

Returns: true if the number of bytes written is more than 0

Network Requirements

• If the Windchill deployment is on-premises, ensure that the egress IP address or CIDR range for Windchill is added to the allow list in the network configuration of the storage account.

• For a PTC-managed Windchill deployment, this is already handled.

You can invoke this Windchill-supported API to send a JSON message for integration processing. The message can then be read from the Azure blob and used to drive further API calls or other integrations.
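A minimal sketch of how a caller might assemble a JSON message and pass it to the write API. ESIBlobUtility is only available inside a Windchill installation, so the sketch accepts the writer as a BiPredicate<String, String> and uses a stand-in writer in main. The class name OutboundMessageSketch, the folder and file naming scheme, and the JSON field names are all hypothetical. Whether writeTextToAzureBlob is static is not stated above, so passing ESIBlobUtility::writeTextToAzureBlob as the writer is an assumption to verify against your installation.

```java
import java.util.function.BiPredicate;

public class OutboundMessageSketch {

    // Builds a blob name relative to the container; the container name itself is excluded,
    // matching the blobNameExcludingContainerName parameter. The naming scheme is hypothetical.
    public static String blobNameFor(String folder, String messageId) {
        return folder + "/" + messageId + ".json";
    }

    // Builds a small JSON payload by hand; the field names are illustrative only.
    public static String toJson(String partNumber, String state) {
        return "{\"partNumber\":\"" + escape(partNumber) + "\",\"state\":\"" + escape(state) + "\"}";
    }

    // Escapes backslashes and double quotes so the values stay valid inside a JSON string.
    public static String escape(String s) {
        return s.replace("\\", "\\\\").replace("\"", "\\\"");
    }

    // Passes the message to the supplied writer and returns the writer's result.
    public static boolean publish(BiPredicate<String, String> writer, String folder,
            String messageId, String json) {
        return writer.test(blobNameFor(folder, messageId), json);
    }

    public static void main(String[] args) {
        // Stand-in writer (assumption): reports success for any non-empty text,
        // loosely mimicking "true if the number of bytes written is more than 0".
        BiPredicate<String, String> fakeWriter = (blobName, text) -> {
            System.out.println("would write " + text.length() + " chars to " + blobName);
            return !text.isEmpty();
        };
        boolean ok = publish(fakeWriter, "outbound", "msg-0001", toJson("PN-100", "RELEASED"));
        System.out.println("written: " + ok);
    }
}
```

Keeping the writer behind a functional interface lets the message-building logic be tested outside Windchill; inside Windchill, the stand-in would be replaced by the real API call.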

Sample Application to Download ERP Response Files from Azure

Windchill now supports storing ESI response files in Azure blobs, in preconfigured Azure Blob containers. The following sample application shows how to download these ESI response files securely from Azure for downstream processing. The sample periodically checks the container and downloads the new files that are stored there when Windchill objects are published to File or MES distribution targets.
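The "check for new files" step can be pictured as a filter over the container listing: keep the XML and JSON blobs, optionally restrict them to a folder prefix, and drop the names that were already recorded as downloaded. The following is a minimal sketch of that idea only, with no Azure calls. The class and method names (NewBlobFilterSketch, pendingBlobs) and the blob names are hypothetical.

```java
import java.util.ArrayList;
import java.util.HashSet;
import java.util.List;
import java.util.Set;

public class NewBlobFilterSketch {

    // Returns the blob names that end in .xml or .json, start with the optional prefix,
    // and are not in the list of already downloaded names. Listing order is preserved.
    public static List<String> pendingBlobs(List<String> listed, List<String> alreadyDownloaded,
            String prefix) {
        Set<String> seen = new HashSet<>(alreadyDownloaded);
        List<String> pending = new ArrayList<>();
        for (String name : listed) {
            boolean inPrefix = prefix == null || name.startsWith(prefix);
            boolean isResponse = name.endsWith(".xml") || name.endsWith(".json");
            if (inPrefix && isResponse && !seen.contains(name)) {
                pending.add(name);
            }
        }
        return pending;
    }

    public static void main(String[] args) {
        List<String> listed = List.of("esi/a.xml", "esi/b.json", "esi/c.txt", "other/d.xml");
        List<String> done = List.of("esi/a.xml");
        System.out.println(pendingBlobs(listed, done, "esi/")); // prints [esi/b.json]
        System.out.println(pendingBlobs(listed, done, null));   // prints [esi/b.json, other/d.xml]
    }
}
```

Copying the recorded names into a HashSet keeps each lookup constant-time, which matters once the record of downloaded files grows large.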

1. Sample code: ESIResponseDownloader.java:

/* bcwti
 *
 * Copyright (c) 2023 Parametric Technology Corporation (PTC). All Rights Reserved.
 *
 * This software is the confidential and proprietary information of PTC
 * and is subject to the terms of a software license agreement. You shall
 * not disclose such confidential information and shall use it only in accordance
 * with the terms of the license agreement.
 *
 * ecwti
 */

import java.io.File;
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.nio.file.StandardOpenOption;
import java.util.ArrayList;
import java.util.List;

import com.azure.storage.blob.BlobClient;
import com.azure.storage.blob.BlobContainerClient;
import com.azure.storage.blob.BlobContainerClientBuilder;
import com.azure.storage.blob.models.BlobItem;
import com.azure.storage.blob.specialized.BlobInputStream;

/**
 * Downloads blob contents from an Azure storage account, given the
 * container-level SAS URL of the container to read from.
 */
public class ESIResponseDownloader {

    public static void main(String[] args) {
        String sasURL = System.getenv("CONTAINER_LEVEL_SAS_URL");
        if (sasURL == null) {
            System.err.println("CONTAINER_LEVEL_SAS_URL is not set. Exiting..");
            return;
        }
        String strFetchIntervalInSeconds = System.getenv("FETCHING_INTERVAL_IN_SECONDS");
        // Default to 300 seconds when no interval is configured
        long fetchingIntervalInMS = Long.parseLong(strFetchIntervalInSeconds != null ? strFetchIntervalInSeconds : "300") * 1000;

        // Get endpoint, containerName and sasToken from the SAS URL, which has the form
        // https://<storage account>.blob.core.windows.net/<container>?<SAS token>
        String[] urlElements = sasURL.split("/");
        // get https://<storage account>.blob.core.windows.net as endpoint
        String endPoint = String.join("/", urlElements[0], urlElements[1], urlElements[2]);
        String[] urlElements2 = urlElements[3].split("\\?", 2);
        String containerName = urlElements2[0];
        String sasToken = urlElements2[1];

        // Download ESI blobs, then wait for the fetch interval and check again
        while (true) {
            try {
                downloadESIBlobs(endPoint, containerName, sasToken);
            } catch (Exception e) {
                System.err.println("Caught an exception! - " + e.getLocalizedMessage()
                        + "\nRetrying after the fetch interval..");
            }
            try {
                Thread.sleep(fetchingIntervalInMS);
            } catch (InterruptedException e) {
                // Restore the interrupt flag and stop polling
                Thread.currentThread().interrupt();
                System.err.println(e.getLocalizedMessage());
                return;
            }
        }
    }

    /**
     * Checks whether each XML or JSON blob listed in the container (optionally limited to
     * SUB_FOLDER_PATH) has already been recorded in the log file. Blobs that have not been
     * recorded are downloaded under PATH_TO_DOWNLOAD and then added to the log file.
     *
     * @param storageAccountUrl Storage account URL
     * @param containerName Container name
     * @param sasToken SAS token
     */
    public static void downloadESIBlobs(String storageAccountUrl, String containerName, String sasToken) {
        final String pathToDownload = System.getenv("PATH_TO_DOWNLOAD");
        final String logFile = System.getenv("LOG_FILE");
        String basePath = Paths.get(pathToDownload, containerName).toString();

        // store the list of all blob names present in the ESI container
        List<String> blobs = listContainerBlobs(storageAccountUrl, containerName, sasToken);
        // store the list of all the blobs already recorded in the log file
        List<String> localFiles = readFromLogFile(logFile);

        blobs.forEach(blob -> {
            // Check if the blob is already recorded, if not download it
            if (!localFiles.contains(blob)) {
                System.out.println(blob + " not present, downloading to " + basePath);
                downloadBlob(storageAccountUrl, containerName, blob, sasToken, basePath);
                // Note: downloadBlob prints and swallows errors, so the blob is recorded
                // here even if its download failed.
                writeToLogFile(blob, logFile);
            }
        });
    }

    /**
     * Returns a list of names of all the blobs (with file extension json or xml)
     * present in the given container. If the SUB_FOLDER_PATH environment variable
     * is set, only blobs inside that folder of the container are returned.
     *
     * @param storageAccountUrl Storage account URL
     * @param containerName Container name
     * @param sasToken SAS token
     * @return List of names (full path) of the matching blobs present in the container
     */
    public static List<String> listContainerBlobs(String storageAccountUrl, String containerName, String sasToken) {
        String subFolderPath = System.getenv("SUB_FOLDER_PATH");
        List<String> blobList = new ArrayList<>();
        BlobContainerClient blobContainerClient = getBlobContainerClient(storageAccountUrl, containerName, sasToken);

        for (BlobItem blob : blobContainerClient.listBlobs()) {
            String name = blob.getName();
            boolean inSubFolder = subFolderPath == null || name.startsWith(subFolderPath);
            boolean isResponseFile = name.endsWith(".xml") || name.endsWith(".json");
            if (inSubFolder && isResponseFile) {
                blobList.add(name);
            }
        }
        return blobList;
    }

    /**
     * Reads the log file line by line. Whenever a file is downloaded, its blob name is
     * written to the log file, so this method returns the names of the files present locally.
     *
     * @param filePath Path to the log file present locally
     * @return List of names of files present locally
     */
    private static List<String> readFromLogFile(String filePath) {
        // Create an empty log file on the first run
        if (!(new File(filePath).exists())) {
            try {
                Files.createFile(Paths.get(filePath));
            } catch (IOException e) {
                e.printStackTrace();
            }
        }
        try {
            return Files.readAllLines(Path.of(filePath));
        } catch (IOException e) {
            e.printStackTrace();
        }
        return new ArrayList<>();
    }

    /**
     * Downloads the Azure blob identified by the given blob name, container name,
     * storage account URL and SAS token. The blob is written to basePath/fullBlobName,
     * and the parent directory is created if it is not present.
     *
     * @param storageAccountUrl Storage account URL
     * @param containerName Container name
     * @param fullBlobName Full name of the blob which needs to be downloaded
     * @param sasToken SAS token
     * @param basePath Folder under which we need to download blob contents
     */
    public static void downloadBlob(String storageAccountUrl, String containerName, String fullBlobName,
            String sasToken, String basePath) {
        // Create a blob client to open a blob input stream
        BlobClient blobClient = getBlobClient(storageAccountUrl, containerName, fullBlobName, sasToken);
        try (BlobInputStream blobIS = blobClient.openInputStream()) {
            String blobAbsolutePath = Paths.get(basePath, fullBlobName).toString();
            // Check if the parent directory of the blob (inside the container) is present locally. If not, create it.
            if (!(new File(blobAbsolutePath).exists())) {
                Files.createDirectories(Paths.get(blobAbsolutePath).getParent());
            }
            // Write the blob content to the absolute path locally.
            Files.write(Paths.get(blobAbsolutePath), blobIS.readAllBytes());
        } catch (Exception e) {
            e.printStackTrace();
        }
    }

    /**
     * Appends the full blob name to the log file.
     *
     * @param fullBlobName Full blob name
     * @param filePath Path to the log file
     */
    private static void writeToLogFile(String fullBlobName, String filePath) {
        try {
            Files.write(Path.of(filePath), (fullBlobName + System.lineSeparator()).getBytes(StandardCharsets.UTF_8),
                    StandardOpenOption.APPEND);
        } catch (IOException e) {
            e.printStackTrace();
        }
    }

    /**
     * Gets the blob container client, which is used for performing
     * Azure container related operations.
     */
    public static BlobContainerClient getBlobContainerClient(String storageAccountUrl, String containerName,
            String sasToken) {
        return new BlobContainerClientBuilder()
                .endpoint(storageAccountUrl)
                .sasToken(sasToken)
                .containerName(containerName)
                .buildClient();
    }

    /**
     * Gets the blob client, which is used for performing blob related operations.
     */
    public static BlobClient getBlobClient(String storageAccountUrl, String containerName, String blobName,
            String sasToken) {
        return getBlobContainerClient(storageAccountUrl, containerName, sasToken).getBlobClient(blobName);
    }

}


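2. Set the following environment variables, and then build and run the project. If FETCHING_INTERVAL_IN_SECONDS is not set, the sample rescans every 300 seconds. SUB_FOLDER_PATH is optional; if it is not set, the whole container is scanned.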

export PATH_TO_DOWNLOAD=<path to download>
export LOG_FILE=<path to log file>
export FETCHING_INTERVAL_IN_SECONDS=<time to rescan and fetch new files>
export SUB_FOLDER_PATH=<specific folder inside the container>

The following value is to be provided by PTC:

export CONTAINER_LEVEL_SAS_URL=<Shared Access Signature URL for that container>

This sample depends on the azure-storage-blob library.
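The CONTAINER_LEVEL_SAS_URL value bundles three pieces that the sample needs separately: the storage account endpoint, the container name, and the SAS token. As an alternative to splitting the string on "/", the following sketch uses java.net.URI to separate them and rejects values that do not have the expected shape. The class name SasUrlPartsSketch, the host examplestorage.blob.core.windows.net, and the token value are made-up placeholders, not values issued by PTC.

```java
import java.net.URI;

public class SasUrlPartsSketch {

    public final String endpoint;      // for example https://<account>.blob.core.windows.net
    public final String containerName; // first path segment of the URL
    public final String sasToken;      // raw query string, still percent-encoded

    private SasUrlPartsSketch(String endpoint, String containerName, String sasToken) {
        this.endpoint = endpoint;
        this.containerName = containerName;
        this.sasToken = sasToken;
    }

    // Splits a container-level SAS URL of the form https://<host>/<container>?<token>.
    public static SasUrlPartsSketch parse(String sasUrl) {
        URI uri = URI.create(sasUrl);
        String path = uri.getRawPath();
        String query = uri.getRawQuery();
        if (uri.getScheme() == null || uri.getRawAuthority() == null
                || path == null || path.length() < 2 || query == null) {
            throw new IllegalArgumentException("Expected https://<host>/<container>?<sas-token>");
        }
        // Drop the leading "/" and anything after the first path segment
        String container = path.substring(1);
        int slash = container.indexOf('/');
        if (slash >= 0) {
            container = container.substring(0, slash);
        }
        return new SasUrlPartsSketch(uri.getScheme() + "://" + uri.getRawAuthority(), container, query);
    }

    public static void main(String[] args) {
        // Placeholder URL for illustration; a real value comes from the environment variable.
        SasUrlPartsSketch parts = parse(
                "https://examplestorage.blob.core.windows.net/esi?sv=2022-11-02&sr=c&sig=abc%2Fdef%3D");
        // The token is a secret, so only the non-sensitive parts are printed.
        System.out.println(parts.endpoint);
        System.out.println(parts.containerName);
    }
}
```

The raw (percent-encoded) query is kept as the token because decoding it would alter the signature.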